Meet 'Chinese CLIP,' An Implementation of CLIP Pretrained on Large-Scale Chinese Datasets with Contrastive Learning - MarkTechPost

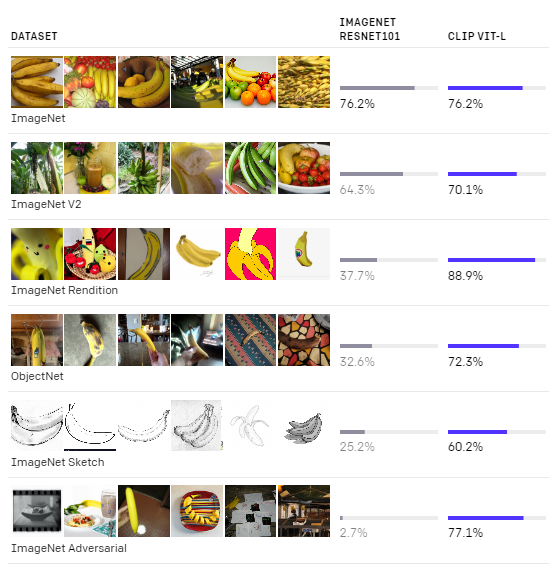

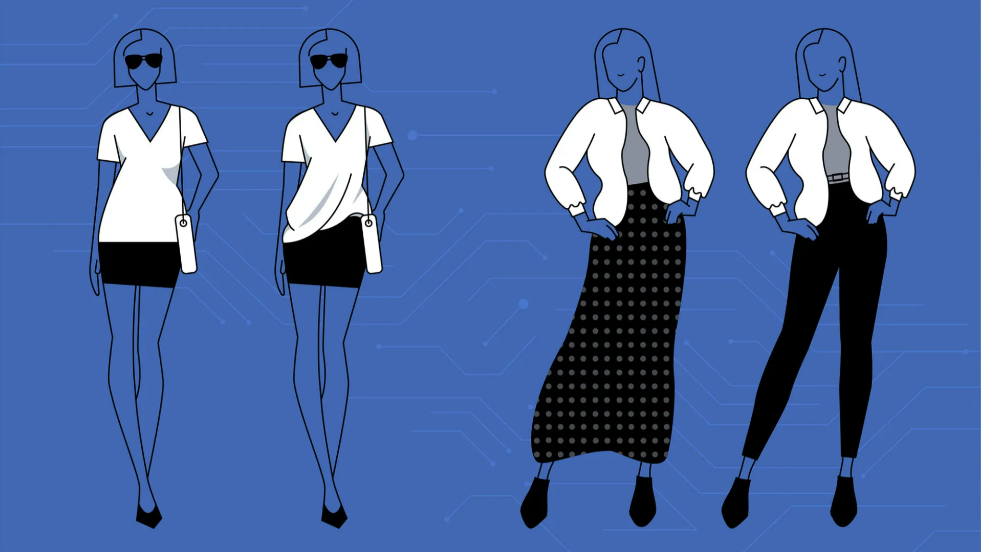

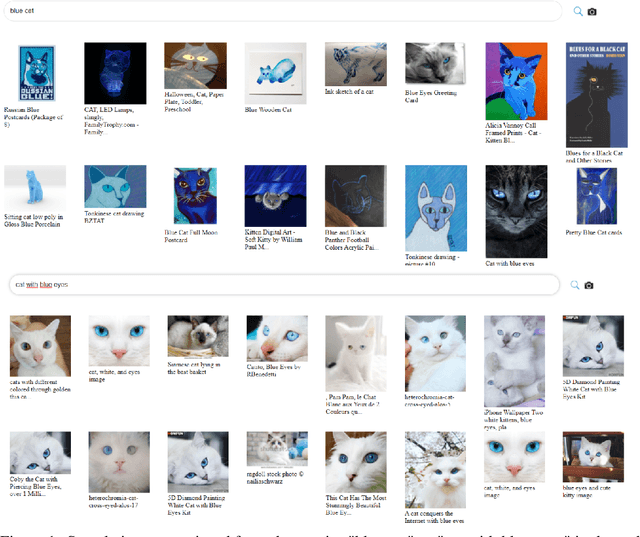

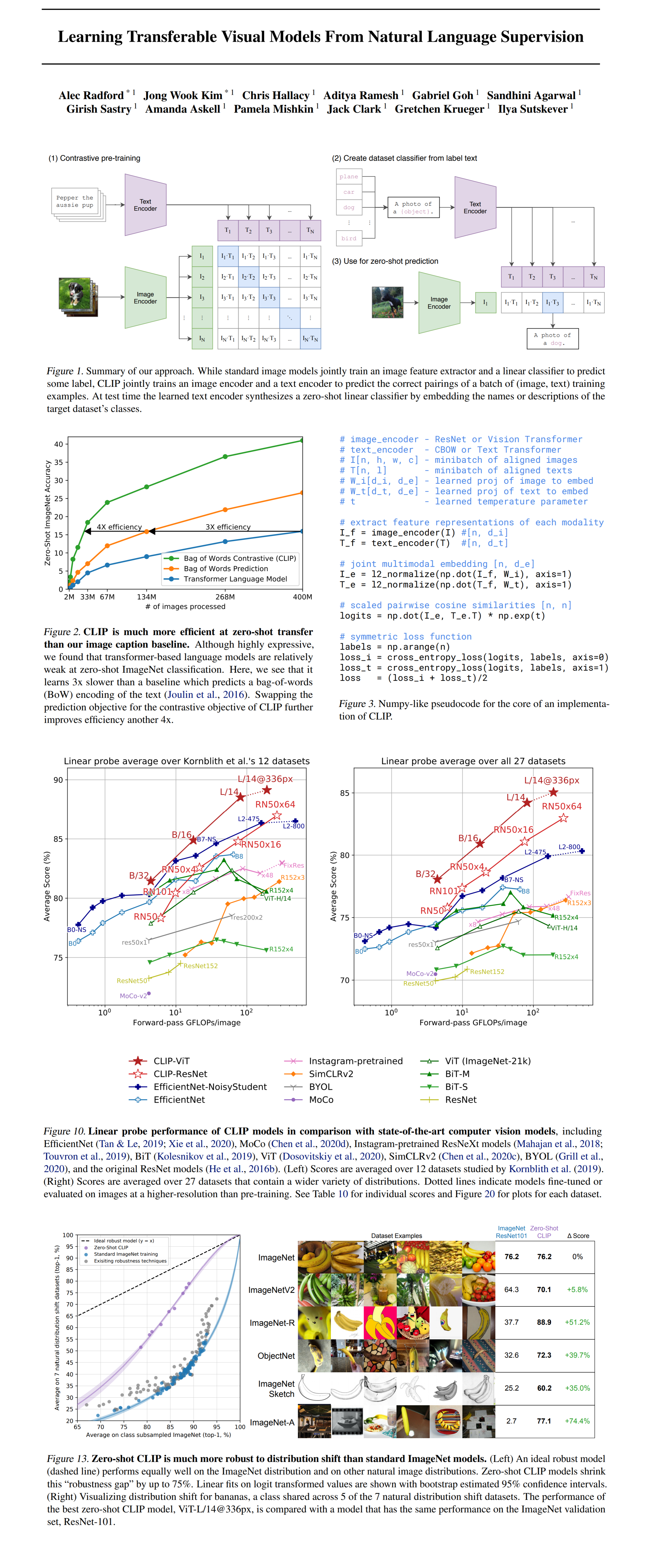

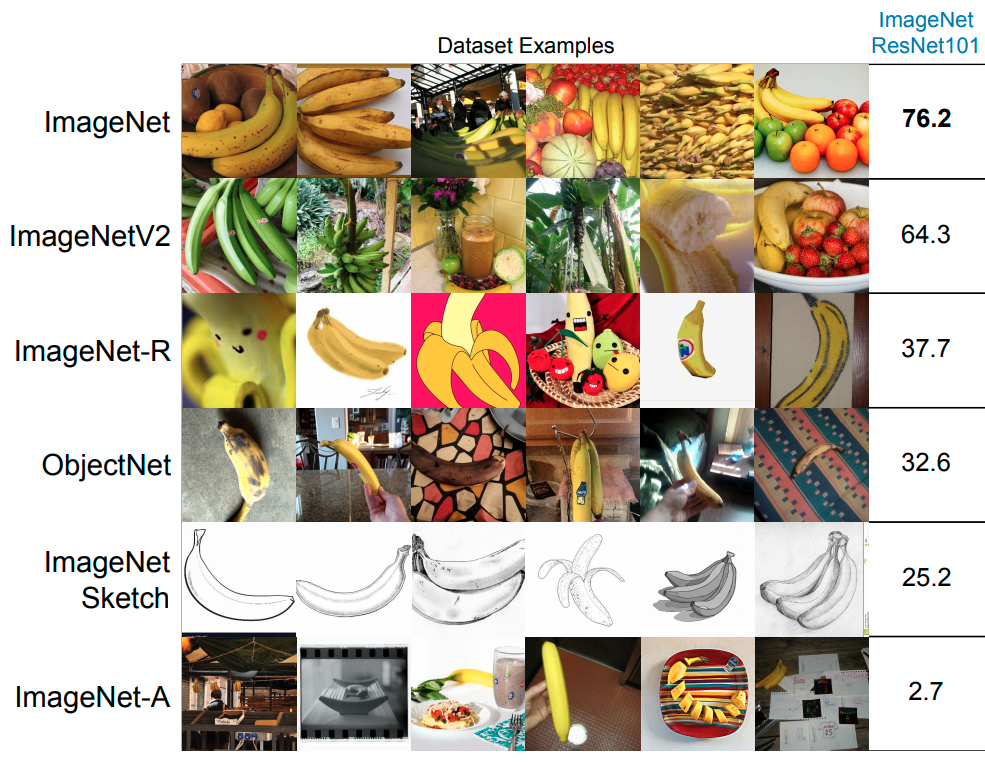

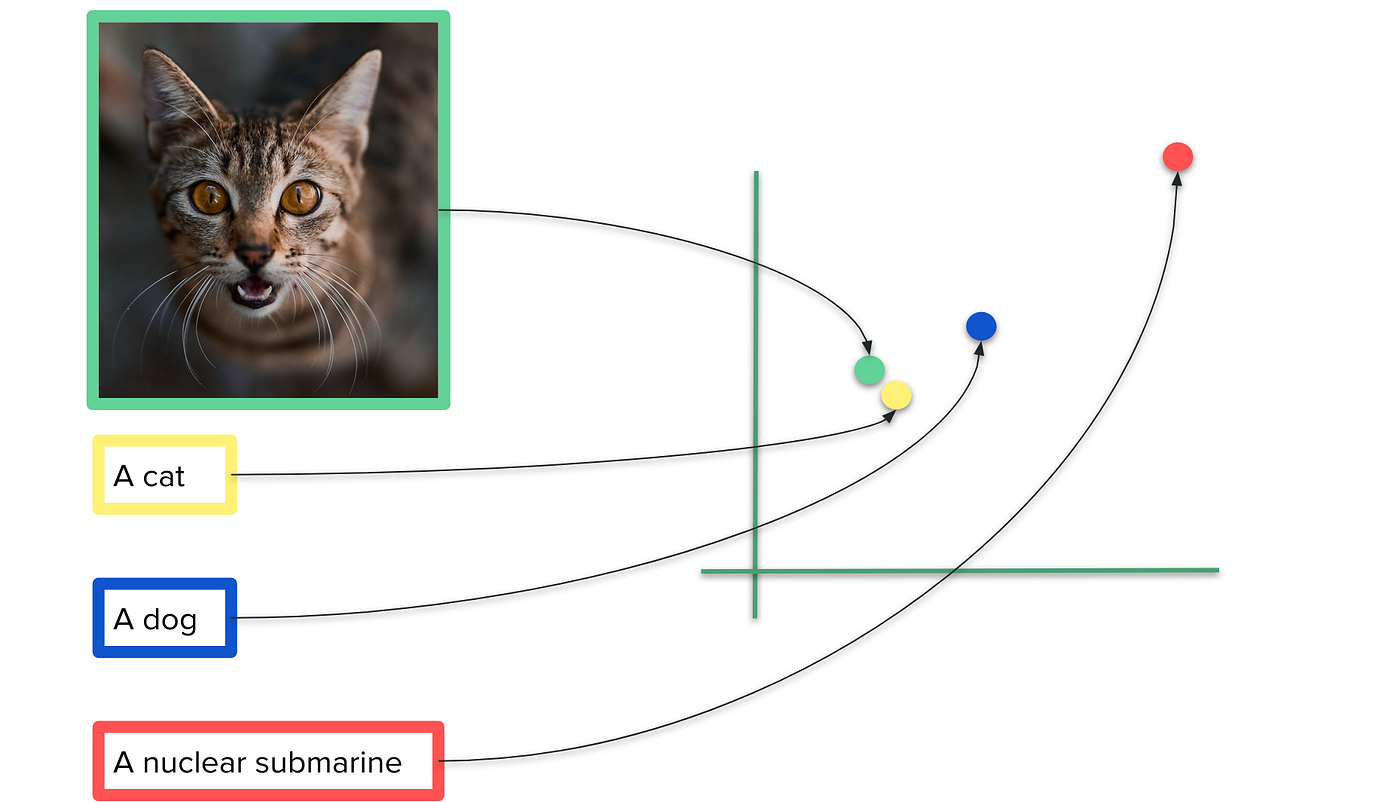

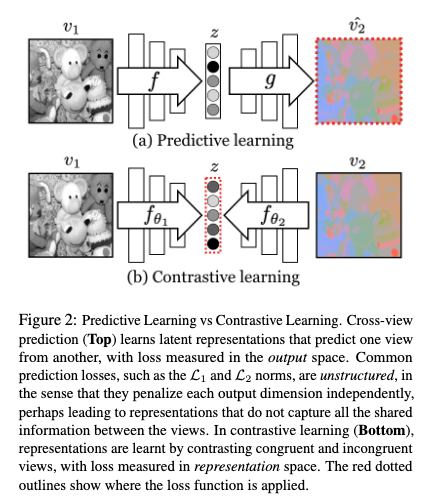

Understand CLIP (Contrastive Language-Image Pre-Training) — Visual Models from NLP | by mithil shah | Medium

Understand CLIP (Contrastive Language-Image Pre-Training) — Visual Models from NLP | by mithil shah | Medium

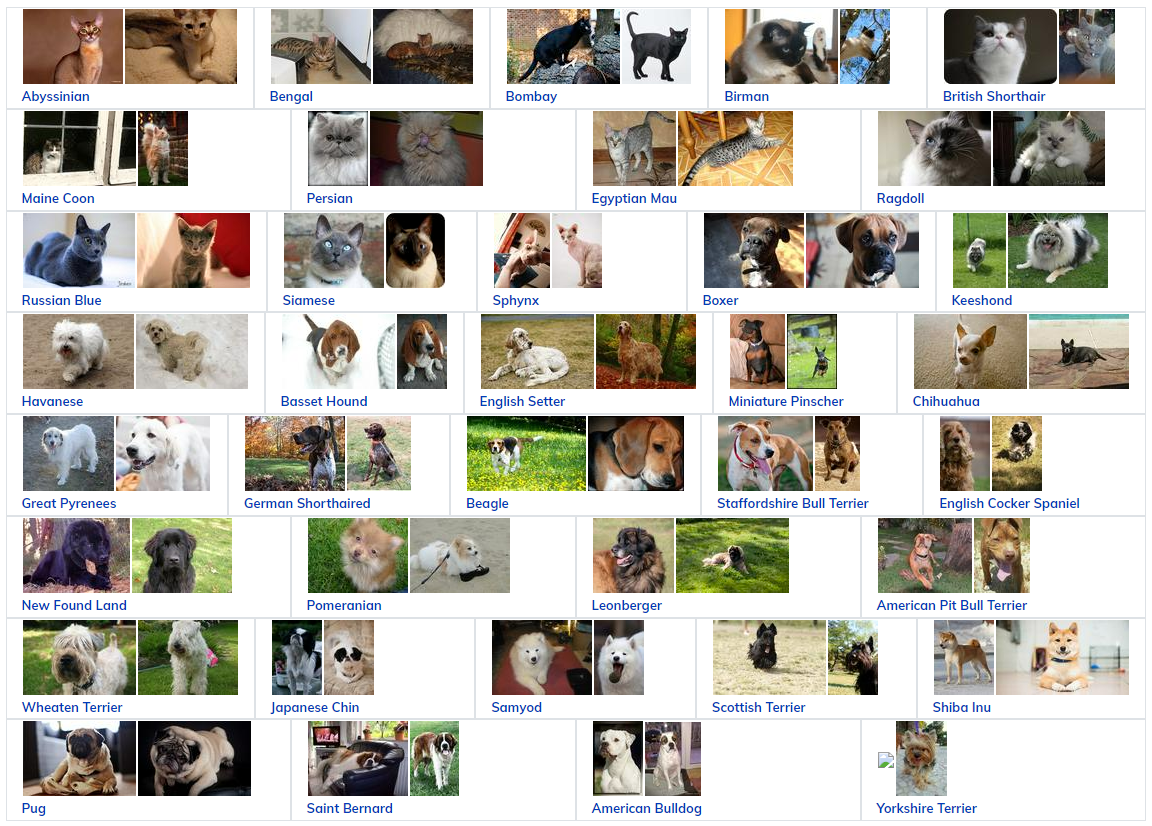

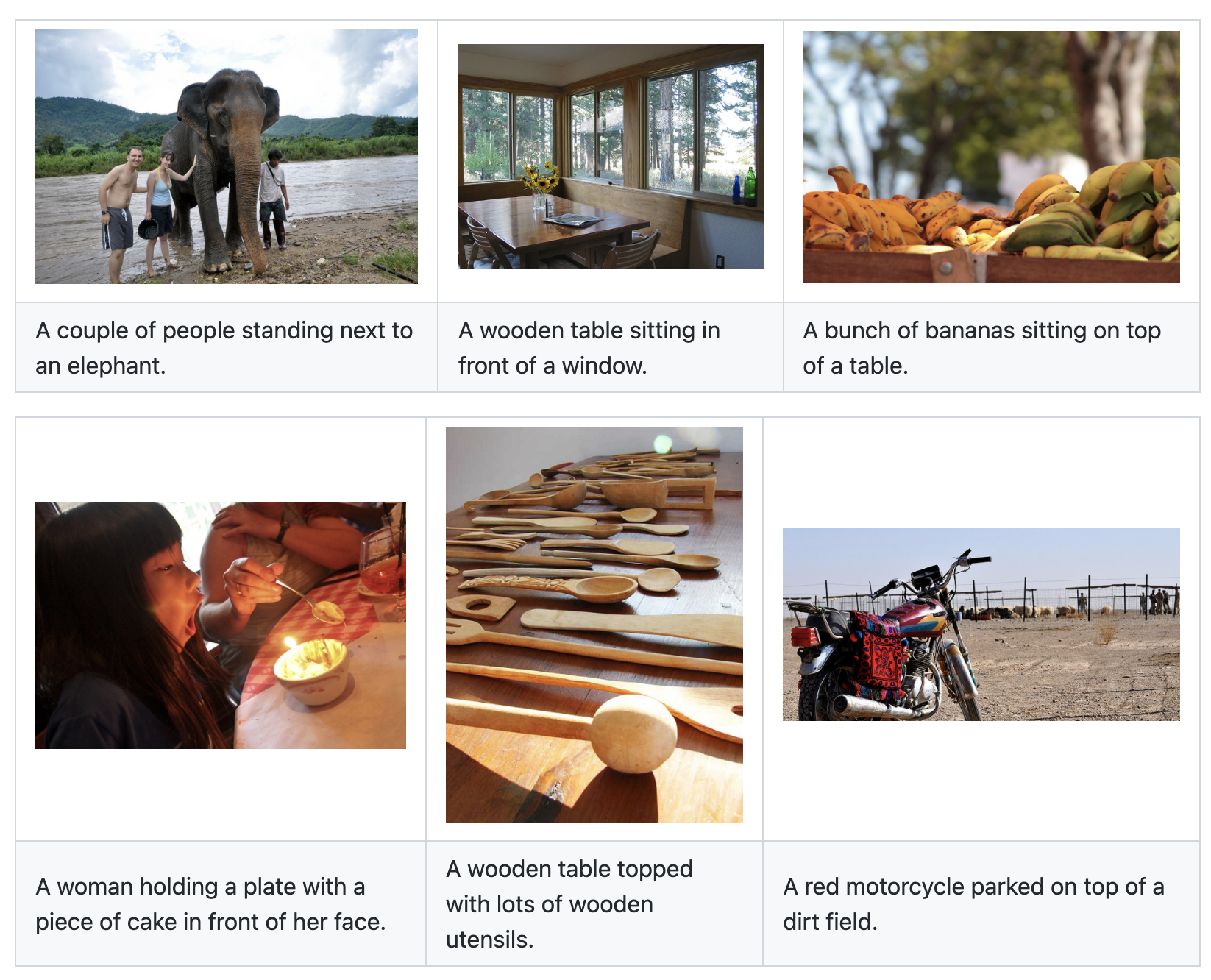

PDF) LAION-400M: Open Dataset of CLIP-Filtered 400 Million Image-Text Pairs | Romain Beaumont - Academia.edu